SDRAM vs. DDR vs. DRAM: Are SDRAM, DDR, and DRAM Memory ICs the Same?

Memory is a critical component in any computing device, from smartphones to supercomputers. These devices use a variety of volatile and non-volatile internal storage units. Memory enables the temporary storage of data and instructions actively used by the CPU. Without memory, computers could not perform tasks efficiently, because they would constantly have to retrieve data from slower storage devices. Modern computers use several memory types, each with unique characteristics and advantages. Synchronous Dynamic Random-Access Memory (SDRAM) synchronizes with the system clock, enabling faster data access and transfer rates compared to asynchronous DRAM. Double Data Rate (DDR) SDRAM builds on SDRAM by transferring data twice per clock cycle. Both are forms of Dynamic Random-Access Memory (DRAM), which is popular due to its cost-effectiveness compared to Static Random-Access Memory (SRAM).

Understanding the distinctions between SDRAM, DDR, and DRAM is crucial when upgrading or building a computer system. Each type of memory has specific strengths and weaknesses, making them suitable for different applications and computing needs. This article explores the differences between SDRAM, DDR, and DRAM memory types, providing a comprehensive understanding to help you choose the right memory for your computing requirements.

What is SDRAM?

SDRAM, short for Synchronous Dynamic Random Access Memory, is a type of computer memory that is synchronized with the system bus, enabling faster data transfer rates compared to earlier RAM types. It falls under the category of dynamic random access memory (DRAM) and is commonly found in most computers, offering faster speeds than asynchronous DRAM.

SDRAM was introduced in the 1990s to address the limitations of earlier DRAM, which used an asynchronous interface. With that interface, responses to control inputs were delayed by the time signals took to travel across the semiconductor pathways. In contrast, SDRAM integrated circuits use an external clock signal to synchronize their operation with the external pin interface.

The synchronous interface of SDRAM works in tandem with the system bus, efficiently transmitting information from the CPU to the memory controller hub. This synchronization leads to quick responses that align with the system bus, contributing significantly to SDRAM's widespread adoption in various computer devices.

Compared to traditional asynchronous DRAMs, SDRAMs offer superior data transfer rates and concurrency. Additionally, SDRAMs boast a simple design at a low cost, which is advantageous for manufacturers. These benefits have cemented SDRAM as a popular and preferred choice in the computer memory market, particularly for RAM.

What is DDR?

Double Data Rate (DDR) is a memory technology that enhances performance in computers and electronic devices. Commonly used in personal computers and servers, DDR accelerates data transfer between memory and the processor. DDR, or DDR SDRAM (Synchronous Dynamic Random Access Memory), synchronizes memory with the system clock, enabling random access to any memory location. DDR SDRAM operates at double the rate by utilizing both the rising and falling edges of the clock signal. The term DDR does not denote speed but signifies the number of data transmissions per clock cycle, allowing DDR to transfer data twice per cycle.

In computer systems, DDR carries data between main memory and the memory controller, which was historically part of the north bridge. DDR memory starts at an effective data rate of 200 MT/s. DDR SDRAM gained popularity due to its cost-effectiveness, doubled data transfer rate, and low power consumption. As technology advances, the need for faster and larger memory grows to manage large data volumes efficiently. DDR has evolved to meet these demands, significantly increasing storage density and speed while reducing costs.

What is DRAM?

DRAM, short for "dynamic random access memory," is a type of RAM (random access memory) commonly found in modern desktops and laptops. Invented by Robert Dennard at IBM (patent filed in 1967 and granted in 1968) and introduced to the market by Intel® in the 1970s, DRAM is a key component of computer memory.

DRAM is pronounced "dee-ram" and uses capacitors to store individual bits of data. RAM, which stands for random access memory, allows any data element to be accessed regardless of its position in a sequence, with a near-constant access time. DRAM is the most cost-effective of the common RAM types because each cell needs only a small access transistor and capacitor, and because semiconductor processes keep improving. Consequently, DRAM is commonly used as the main memory in computers, at a much lower cost per bit than SRAM alternatives.

Over the years, DRAM technology has undergone significant revisions to reduce cost-per-bit, increase clock rate, and decrease overall dimensions. These improvements include the introduction of smaller DRAM cells, synchronous DRAM architectures, and DDR topologies, making DRAM a crucial component of modern computing.

DRAM Cell Structure

A typical 3-transistor DRAM cell uses two access transistors and one storage transistor. The charge on the storage transistor's gate capacitance switches it on (for bit value 1) or off (for bit value 0). The transistors are connected to column lines (bit lines) and row lines (word lines) for reading and writing. This arrangement enables both write and read operations with a single storage transistor.

In a traditional 3-transistor DRAM cell, a write command involves applying a voltage to the gate of the M1 access transistor, which charges the gate capacitance of M3. The write line is then driven low, isolating the charge on the gate capacitance of M3. That charge leaks away slowly and must be restored periodically, hence the term "dynamic."

3-transistor DRAM cell (to the left) and a 1-transistor/1-capacitor DRAM cell (to the right)

Modern technologies often use a 1-transistor/1-capacitor (1T1C) memory cell for more densely packed memory chips. In this architecture, the transistor's gate is connected to the word line, while the source is connected to the bit line. A write command turns on the transistor, letting current flow between the bit line and the storage capacitor. Reading involves sharing the charge stored in the capacitor with the bit line. However, this sharing process destroys the information in the DRAM cell, so the value must be written back after each read operation. Separately, every cell must be refreshed periodically, typically within tens of milliseconds, to compensate for charge leaking from the capacitor.
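The need for periodic refresh can be illustrated with a rough leakage model. The sketch below assumes the capacitor voltage decays exponentially, which is a simplification, and the charge level, sensing threshold, and time constant are illustrative values rather than figures from any datasheet.

```python
import math

V0 = 1.0        # assumed full-charge level for a stored 1 (normalized)
V_MIN = 0.5     # assumed lowest level the sense amplifier still reads as 1
TAU_MS = 100.0  # assumed leakage time constant, illustrative only


def cell_voltage(v0: float, t_ms: float, tau_ms: float) -> float:
    """Voltage left on the storage capacitor after t_ms of leakage."""
    return v0 * math.exp(-t_ms / tau_ms)


def max_refresh_interval(v0: float, v_min: float, tau_ms: float) -> float:
    """Longest wait in ms before a stored 1 decays below v_min."""
    return tau_ms * math.log(v0 / v_min)


limit = max_refresh_interval(V0, V_MIN, TAU_MS)
print(f"refresh needed within {limit:.1f} ms")  # prints 69.3 ms for these values
```

With these assumed numbers the cell must be refreshed before roughly 69 ms have passed; a leakier cell (smaller time constant) would shorten that window proportionally.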

Asynchronous DRAM Operation

The complexity of DRAM technology is most evident in its hierarchical structure, particularly in managing arrays of thousands of cells for various operations such as writes, reads, and refreshes. Current DRAM technology uses multiplexed addressing, where the same address input pins serve for both row and column addresses, reducing space requirements and pin count.

Operations are coordinated by a Row Address Strobe (RAS) and a Column Address Strobe (CAS) signal. RAS validates the row address signal, while CAS validates column addresses. On the falling edge of RAS, the address on the DRAM address pins is latched into row address latches. Similarly, on the falling edge of CAS, addresses are latched into column address latches. Activating an entire row in the memory array allows reading (sensing) the information stored in the capacitors or charging/discharging the storage capacitors for writing.
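Multiplexed addressing can be sketched as splitting one cell address into a row part and a column part that share the same pins. The bit widths and the `AddressLatches` class below are hypothetical and chosen only for illustration.

```python
ROW_BITS = 12  # assumed number of row address bits
COL_BITS = 10  # assumed number of column address bits


def split_address(addr: int) -> tuple[int, int]:
    """Split a flat cell address into (row, column) for the shared pins."""
    row = (addr >> COL_BITS) & ((1 << ROW_BITS) - 1)
    col = addr & ((1 << COL_BITS) - 1)
    return row, col


class AddressLatches:
    """Hypothetical model of the row and column address latches."""

    def __init__(self):
        self.row = None
        self.col = None

    def ras_falling(self, pins: int) -> None:
        # Falling edge of RAS: the pins carry the row address.
        self.row = pins

    def cas_falling(self, pins: int) -> None:
        # Falling edge of CAS: the same pins now carry the column address.
        self.col = pins


row, col = split_address(0x2A7F3)
latches = AddressLatches()
latches.ras_falling(row)  # first half of the access
latches.cas_falling(col)  # second half, reusing the same pins
print(latches.row, latches.col)  # 169 1011
```

In this sketch 12 shared pins are enough to address 22 bits of cell address, which is the pin-count saving the multiplexed scheme is meant to provide.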

This is achieved through various peripheral circuits, including row/column latches, decoders, drivers, and sense amplifiers. For 1T1C DRAM cells, the sense amplifiers serve as a row buffer to prevent information loss in read cells (each read destroys the information). Sense amplifiers sense the storage capacitor's charge status and amplify a low-power signal to a full logic value (0 or 1). A selected row in memory cannot be accessed until the information is loaded and stored in the sense amplifiers. If the desired row is not active when requested, this row activation adds a delay (the RAS-to-CAS delay) on top of the CAS latency.

In the first step, when RAS is low, all cells in a row are read by the sense amplifiers, a relatively time-consuming process. Subsequently, the row is active for column access for read or write operations. The access time (read/write cycle time) for RAS is generally longer than for CAS due to the sense amplification step. Asynchronous DRAM bus speeds typically top out at 66 MHz.
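The cost difference between the RAS and CAS steps can be sketched with a toy row-buffer model. The two timing constants are assumed values for illustration, and precharge time is ignored to keep the example short.

```python
T_ROW_NS = 50  # assumed time to sense a whole row (RAS step)
T_COL_NS = 20  # assumed time for one column access (CAS step)


class RowBuffer:
    """Toy model: the sense amplifiers hold one open row at a time."""

    def __init__(self):
        self.open_row = None

    def access(self, row: int) -> int:
        """Return the time in ns for one column access within `row`."""
        if row == self.open_row:
            return T_COL_NS  # row already sensed, only the CAS step
        self.open_row = row
        return T_ROW_NS + T_COL_NS  # RAS step first, then CAS step


def total_time(rows) -> int:
    """Total time in ns for a sequence of accesses, one row index each."""
    buf = RowBuffer()
    return sum(buf.access(r) for r in rows)


same_row = total_time([3, 3, 3, 3])  # one row activation, four column accesses
mixed = total_time([3, 7, 3, 7])     # every access has to open a new row
print(same_row, mixed)  # 130 280
```

Accesses that stay within an already active row pay only the column step, which is why access patterns with good locality complete faster in this model.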

Address timing for asynchronous DRAM

SDRAM vs. DRAM Memory

SDRAM synchronizes all operations (read, write, refresh) with a system clock tied to the memory bus, which for Single Data Rate (SDR) SDRAM typically runs at 100 or 133 MHz. This synchronous operation allows much higher clock speeds than conventional asynchronous DRAM, roughly double its 66 MHz ceiling. All actions to and from the SDRAM occur at the rising edge of a master clock.

A key feature of SDRAM architectures is the division of memory into several equal-size sections called memory banks. These banks can execute access commands simultaneously, resulting in much higher speeds than conventional DRAM. While the basic core and operations of SDRAM remain the same as traditional DRAM (as shown in Figure 3), SDRAM adds a separate synchronous command and I/O interface around the DRAM array.

The enhanced speeds of SDRAMs largely stem from pipelining. While one bank undergoes pre-charging and access latency, another bank may be reading, ensuring the memory chip constantly outputs data. This architecture with multiple banks allows for concurrent access to different rows, further boosting performance.
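The effect of bank interleaving can be sketched with a small cycle-counting model. It assumes requests rotate across banks in round-robin order, each access keeps its bank busy for a fixed number of cycles before using a shared data bus for one cycle, and commands can be issued without limit. These are simplifications for illustration, not a description of a real memory controller.

```python
def total_cycles(num_accesses: int, num_banks: int, busy_cycles: int) -> int:
    """Cycles needed to finish all accesses under the assumptions above."""
    bank_free_at = [0] * num_banks  # cycle at which each bank can start again
    bus_free_at = 0                 # cycle at which the shared data bus is free
    for i in range(num_accesses):
        bank = i % num_banks
        ready = bank_free_at[bank] + busy_cycles  # precharge and access latency
        data_cycle = max(ready, bus_free_at)      # wait for the data bus if needed
        bus_free_at = data_cycle + 1
        bank_free_at[bank] = data_cycle + 1
    return bus_free_at


single_bank = total_cycles(num_accesses=8, num_banks=1, busy_cycles=4)
four_banks = total_cycles(num_accesses=8, num_banks=4, busy_cycles=4)
print(single_bank, four_banks)  # 40 13
```

With one bank every access waits out the full latency, while with four banks most of that latency overlaps with transfers from the other banks, so the data bus stays busy for a larger share of the time.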

Here is the SDRAM vs. DRAM comparison table:

| Difference | DRAM (asynchronous) | SDRAM |
|---|---|---|
| Inventor | Dr. Robert Dennard, IBM, 1967 | - |
| Cell Type | Single-transistor cell | Same DRAM cell |
| Storage | Data stored on a capacitor, requires regular refreshing | Data stored on a capacitor, requires regular refreshing |
| Synchronization | Asynchronous with computer's clock | Synchronized with computer's clock |
| Speed | Slower due to asynchronous operation | Faster due to pipelined, multi-bank operation |
| Popularity | Less popular | More popular |

SDRAM vs. DDR Memory

While the clock rate of Single Data Rate (SDR) SDRAM is adequate for many applications, it often falls short for multimedia applications. This limitation led to the development of Double Data Rate SDRAM (DDR SDRAM). DDR SDRAM can transfer data on both the rising and falling edges of the master clock, effectively doubling the data transfer rate per clock cycle compared to SDR SDRAM.

DDR achieves this by prefetching data: the internal bus fetches two words of data simultaneously and bursts them as two words of equal width on the I/O pins, one on each clock edge. This 2-bit prefetch doubles the data rate without raising the clock frequency. Successive DDR generations also improve power efficiency, with DDR running at 2.5V, DDR2 at 1.8V, DDR3 at 1.5V (1.35V for low-voltage parts), and DDR4 at 1.2V. These improvements are due to revisions in power management circuitry and smarter frequency management, making DDR modules more attractive for battery-powered devices like laptops.
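The difference between single and double data rate can be sketched by counting transfers per clock cycle. The helper names below are hypothetical and the word labels are placeholders.

```python
def data_rate_mts(clock_mhz: float, transfers_per_cycle: int) -> float:
    """Data rate in MT/s for a clock in MHz and transfers per clock cycle."""
    return clock_mhz * transfers_per_cycle


def burst(words, transfers_per_cycle: int):
    """Group words by the clock cycle in which they leave the I/O pins."""
    return [
        words[i:i + transfers_per_cycle]
        for i in range(0, len(words), transfers_per_cycle)
    ]


words = ["w0", "w1", "w2", "w3"]
sdr = burst(words, 1)  # SDR: one word per cycle, on the rising edge
ddr = burst(words, 2)  # DDR: two words per cycle, rising and falling edge
print(len(sdr), len(ddr))                            # 4 2
print(data_rate_mts(133, 1), data_rate_mts(133, 2))  # 133 266
```

At the same 133 MHz clock, the DDR burst finishes in half the cycles, which is where the doubled data rate comes from.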

Here is the SDRAM vs. DDR memory comparison table:

| Difference | SDRAM | DDR |
|---|---|---|
| Voltage | 3.3 V | 2.5 V (standard); 1.8 V (low voltage) |
| Bus clock | 66 MHz, 100 MHz, 133 MHz | 100 MHz, 133 MHz, 166 MHz, 200 MHz |
| Data rate | 66 MT/s, 100 MT/s, 133 MT/s | 200 MT/s, 266 MT/s, 333 MT/s, 400 MT/s |
| Modules | 168-pin DIMM | 184-pin DIMM (unbuffered or registered); 200-pin SODIMM; 172-pin MicroDIMM |
| Clock Signal | Uses only the rising edge for data transfer | Transfers data on both rising and falling edges |
| Relative speed | Slower; one transfer per clock cycle | Faster; roughly twice the data rate of SDRAM at the same clock |
| Physical Specs | 168 pins, two notches at connector | 184 pins, single notch at connector |
| Module naming | PC66, PC100, PC133 | PC-1600, PC-2100, PC-2700, PC-3200 |
| Bandwidth | One transfer per clock cycle | Doubled bandwidth due to double data rate |
| Generations | Single generation (SDR) | DDR, followed by DDR2, DDR3, DDR4, and DDR5 |
| Compatibility | Must match the memory type and speed the motherboard supports | Must match the memory type and speed the motherboard supports |
| Release year | 1993 | 2000 |
| Data Strobes | None | Single-ended |
| Succeeded by | DDR (or DDR1) | DDR2 |

What Is the Difference Between DDR, DDR2, DDR3 and DDR4?

The later evolutions of DDR (DDR2, DDR3, DDR4) maintain the same underlying components and functionality as their predecessor, with the key differences being a higher bus clock, a deeper prefetch, and a lower operating voltage. For example, DDR2 RAM introduced a 2x clock multiplier to the DDR SDRAM interface, doubling data transfer speeds while keeping the internal array speed the same. This is achieved by employing a '4-bit prefetch' from the memory array to the I/O buffer. Similarly, DDR3 modules prefetch 8 bits of data. DDR4 keeps the 8-bit prefetch and raises the bus clock further.
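The relationship between prefetch depth and data rate can be written as a short calculation. This is a sketch under the assumption that the data rate equals the internal array clock multiplied by the prefetch depth, and that the bus clock is half the data rate because data moves on both clock edges. The function names are hypothetical.

```python
# Prefetch depth per generation, as described in the text above.
PREFETCH = {"DDR": 2, "DDR2": 4, "DDR3": 8}


def data_rate_mts(internal_mhz: float, standard: str) -> float:
    """Data rate in MT/s from the internal array clock and prefetch depth."""
    return internal_mhz * PREFETCH[standard]


def bus_clock_mhz(internal_mhz: float, standard: str) -> float:
    """Bus clock in MHz; two transfers happen per bus clock cycle."""
    return data_rate_mts(internal_mhz, standard) / 2


for std in PREFETCH:
    # Same 200 MHz internal array clock for every generation.
    print(std, data_rate_mts(200, std), bus_clock_mhz(200, std))
```

For the same 200 MHz internal clock, this gives 400, 800, and 1600 MT/s for DDR, DDR2, and DDR3, showing how each generation gains speed at the interface while the memory array itself runs no faster.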

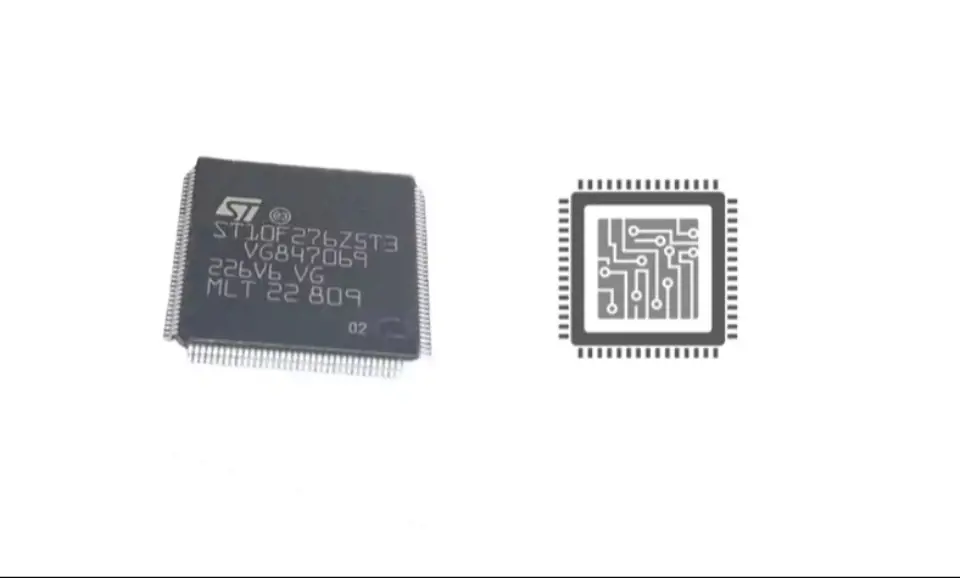

A DRAM memory array with SDRAM interface (to the right) and DDR control interface (to the left)

Here is the DDR vs. DDR2 vs. DDR3 vs. DDR4 comparison table:

| DDR SDRAM Standard | Internal rate (MHz) | Bus clock (MHz) | Prefetch | Data rate (MT/s) | Transfer rate (GB/s) | Voltage (V) |
|---|---|---|---|---|---|---|
| DDR | 133-200 | 133-200 | 2n | 266-400 | 2.1-3.2 | 2.5/2.6 |
| DDR2 | 133-200 | 266-400 | 4n | 533-800 | 4.2-6.4 | 1.8 |
| DDR3 | 133-200 | 533-800 | 8n | 1066-1600 | 8.5-12.8 | 1.35/1.5 |
| DDR4 | 266-400 | 1066-1600 | 8n | 2133-3200 | 17-25.6 | 1.2 |

A comparison of different generations of DDR SDRAM chips
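The transfer rate of a module follows from its data rate and bus width. The sketch below assumes a 64-bit (8-byte) module data bus, which is typical for a standard DIMM but is an assumption here, and the function name is hypothetical.

```python
BUS_WIDTH_BITS = 64  # assumed data bus width of a standard DIMM


def peak_bandwidth_gbs(rate_mts: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak transfer rate in GB/s for a data rate given in MT/s."""
    bytes_per_transfer = bus_width_bits / 8
    return rate_mts * bytes_per_transfer / 1000  # MB/s converted to GB/s


modules = [("DDR-400", 400), ("DDR2-800", 800), ("DDR3-1066", 1066), ("DDR4-2133", 2133)]
for name, rate in modules:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s")
```

Under this assumption the four modules come out at about 3.2, 6.4, 8.5, and 17.1 GB/s. These are theoretical peaks; sustained throughput in a real system is lower.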

SDRAM vs. DDR vs. DRAM Memory Comparison Table

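| Feature | SDRAM (SDR) | DDR (Double Data Rate SDRAM) | DRAM (asynchronous) |
|---|---|---|---|
| Speed | Moderate (66 to 133 MHz clock) | Higher (200 MT/s and up) | Lower (bus speeds up to about 66 MHz) |
| Latency | Overlapped across banks by pipelining | Overlapped across banks, as in SDRAM | Exposed on every access through RAS/CAS timing |
| Bandwidth | Moderate | Higher (two transfers per clock cycle) | Lower |
| Power Consumption | Higher (3.3 V) | Lower (2.5 V, falling in later generations) | - |
| Cost | Low cost per bit (DRAM cells) | Low cost per bit (DRAM cells) | Low cost per bit (DRAM cells) |
| Compatibility | Systems with 168-pin DIMM slots | Systems with 184-pin DIMM slots; later generations need their own slots | Legacy systems |
| Scalability | Limited | High (DDR2 through DDR5) | Limited |

As you can see, SDRAM and DDR are both forms of DRAM, so the practical choice depends on what the system supports and what the application requires. SDR SDRAM is found mainly in older or cost-sensitive designs, while DDR and its successors offer improved performance and bandwidth. Asynchronous DRAM has largely been replaced by these synchronous types.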

Conclusion

In summary, SDRAM, DDR, and DRAM are closely related rather than identical. DRAM is the underlying, cost-effective capacitor-based memory technology. SDRAM adds a synchronous interface that offers faster data access and transfer rates, and DDR improves upon this by doubling the data transfer rate per clock cycle.

When choosing the right memory type for specific applications, it is crucial to consider factors such as performance requirements, budget constraints, and compatibility requirements to make an informed decision.

FAQ

What is better, SDRAM or DRAM?

SDRAM is generally faster than asynchronous DRAM. Single Data Rate (SDR) SDRAM typically operates at clock rates of 100 and 133 MHz. A key distinction in SDRAM architectures is the division of memory into multiple sections of equal size. These memory banks can execute access commands concurrently, enabling significantly higher speeds compared to standard DRAM.

How do I know if I have SDRAM or DRAM?

To identify the type of RAM in your system, you can use a free tool like CPU-Z or HWiNFO64. These tools provide detailed information about your system. In CPU-Z, navigate to the Memory tab, where the first box will display the type of RAM installed.

Are DDR4 and DDR4 SDRAM the same thing?

Yes. DDR4, short for Double Data Rate Fourth Generation, is a type of random access memory (RAM). More precisely, it is a form of SDRAM (synchronous dynamic random access memory), meaning its operations are synchronized with a clock signal. DDR4 was introduced in 2014, with DDR5 reaching mainstream desktop platforms in November 2021.

What is the advantage of SDRAM?

SDRAM offers faster data transfer rates because of its synchronous nature. It also boasts higher bandwidth, so more data can be transferred in the same amount of time. Moreover, SDRAM consumes less power than its predecessor, asynchronous dynamic random-access memory (DRAM).

Can you mix DDR4 and DDR5 RAM?

No. DDR5 RAM has a different pin layout than DDR4 RAM. While both modules have key notches to lock them in place in the motherboard's DIMM slot, the notch position and pin layout on a DDR5 module differ from those of a DDR4 module, so a module of one type will not fit a slot designed for the other.

Is DDR4 SDRAM good for gaming?

Yes, for most games. DDR4 data rates start at around 1600 MT/s, though these speeds are now considered slow. Higher-rated RAM speeds offer advantages for gaming, improving game performance and frame rates, although less significantly than upgrading the processor or graphics card. Over time, SDRAM has improved, offering benefits such as lower power consumption, faster transfer rates, and more stable data transmission.

Prof. David Reynolds
